To say that Nvidia has been intently focused on AI in recent years might be an understatement. The company’s H100 Hopper accelerators are probably the hottest silicon on the planet, and part of the reason for the company’s meteoric stock-price rise over the past several years. Plus, each new generation of Nvidia graphics cards features progressively more powerful hardware that, in addition to being optimized for graphics acceleration and gaming, has the side benefit of being the best available consumer-grade gear for accelerating AI operations.

That hardware isn’t of much use without software to support it, though, which is where one of Nvidia’s newest projects comes in: Chat With RTX. This software is designed to take advantage of the tensor cores in a typical late-model GeForce RTX card and give you an easy-to-use AI-driven tool for multiple tasks, including running your own local large language model (LLM) with documents and data you provide.

Chat With RTX: Support and Setup

Nvidia’s Chat With RTX software is still in its early stages of development, but beta version 0.2 releases today, February 13. Currently, it offers support only for Nvidia GeForce RTX 30-series and RTX 40-series graphics cards. It’s designed to take advantage of the tensor cores inside these cards to support various AI-driven functions.

(Credit: PCMag)

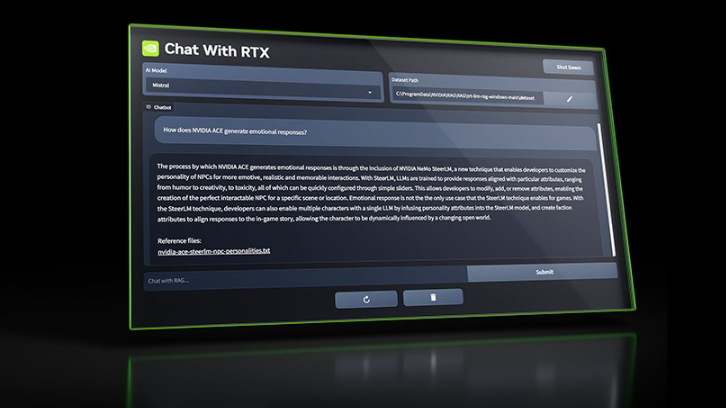

I received this software a few days early so that PCMag could give it a test drive. I installed the software on a PC with an Nvidia GeForce RTX 3080 Founders Edition graphics card. The install went smoothly but took some time; the installer is large. The installation process looks identical to what you see when you install an Nvidia graphics driver, complete with the option to do a clean install. The files we were sent came in at right around 35GB, with a few extra PDFs thrown in as orientation materials.

It’s difficult to tell exactly how much space the software consumes at this time, though, as more files are downloaded in the course of the installation process. This is what makes the installation take so long, with Nvidia suggesting it should take between 30 minutes and an hour on average. I left the installer running and did not record exactly how long it took, but it was less than an hour for me. The instructions we received from Nvidia suggest you need to have 100GB of space available, at a minimum.

Using Chat With RTX

Several features come built into the Chat With RTX software, though not all of these appear to be functional at the time of writing. The “RTX” in this case, according to Nvidia, stands for “Real-Time Translation,” which doesn’t make much sense given there isn’t an “X” in “Translation.” The real reason for the “RTX” being here, of course, is that Nvidia wanted the software’s name to reference its GeForce RTX graphics cards.

Using Chat With RTX, you can create a customized chatbot with an LLM to perform a wide range of tasks. At this time, the software has one pre-defined AI model that Nvidia has dubbed “Mistral,” and when the dataset is set to use the “AI model default,” Mistral is what will be used. In this mode, Chat With RTX works similarly to ChatGPT, though it feels a bit less sophisticated.

You can query the AI with specific questions, and it will attempt to answer using information from the web.
I asked both Chat With RTX and ChatGPT a suggested sample question of “Explain what superconductors are to me like I’m five.”


Both provided an answer that was too long to post here. The Chat With RTX answer was more direct, though it seemed to ignore the “like I’m five” part. ChatGPT provided a simpler answer, talked to me using child-appropriate vocabulary, and used a metaphor to explain the concept.

Chat With RTX also supports two other types of datasets at this time. You can give it a YouTube link, and the software will then be able to answer questions posed to it, so long as the video contains information related to them. This seemed to work quite well when I gave it the URL to PCMag’s 40th anniversary video, with Chat With RTX able to answer specific questions and summarize the video for me.


You can also give the system data files in the form of PDF, TXT, and DOC files. I tried this out with two separate datasets. First, I supplied it with a few free-to-download books on the kings of England and asked it follow-up questions that it was able to answer correctly. Then, I downloaded a PDF version of the graphics-card test results we’ve collected for analysis. I normally store this information as an Excel document, so it’s possible that the PDF version created issues for the software reading the data; asking questions about this data did not go well.



First, I asked it “Which graphics card is fastest?” to which it responded that the AMD Radeon RX 7900 XTX was, likely to Nvidia’s chagrin. I asked if it was sure, but it did not understand this question. I tried asking the original question again, and this time it told me the Nvidia GeForce RTX 4090.


I tried to ask it a few other questions, but its answers here were a bit jumbled, pulling from multiple pages in the PDF without providing clear answers.

Future Features and Release

That was the extent of my quick test-drive with the software; given the time I had with the app, I did not have a large AI-friendly database to train Chat With RTX on. But there are indications that the software will be capable of a lot more in the near future.


When I asked Chat With RTX about its functions, it indicated that there was a way for the system to transcribe my words while I talked. It said I just needed to press a “speak” button to do this, but I couldn’t find this button, nor could the system tell me where it was. This may well be a feature that hasn’t been fully implemented yet. The software also claims support for more than 100 languages, with options for advanced grammar- and spell-checking, language translation, and custom workloads.


Given that this software is only a beta, at version 0.2, it’s questionable how many of these features are functional at this time. It’s likely that we’ll see a lot more from Nvidia about this software as its development continues. But for now, you can download the software from Nvidia and GitHub if you want to try it out yourself.
