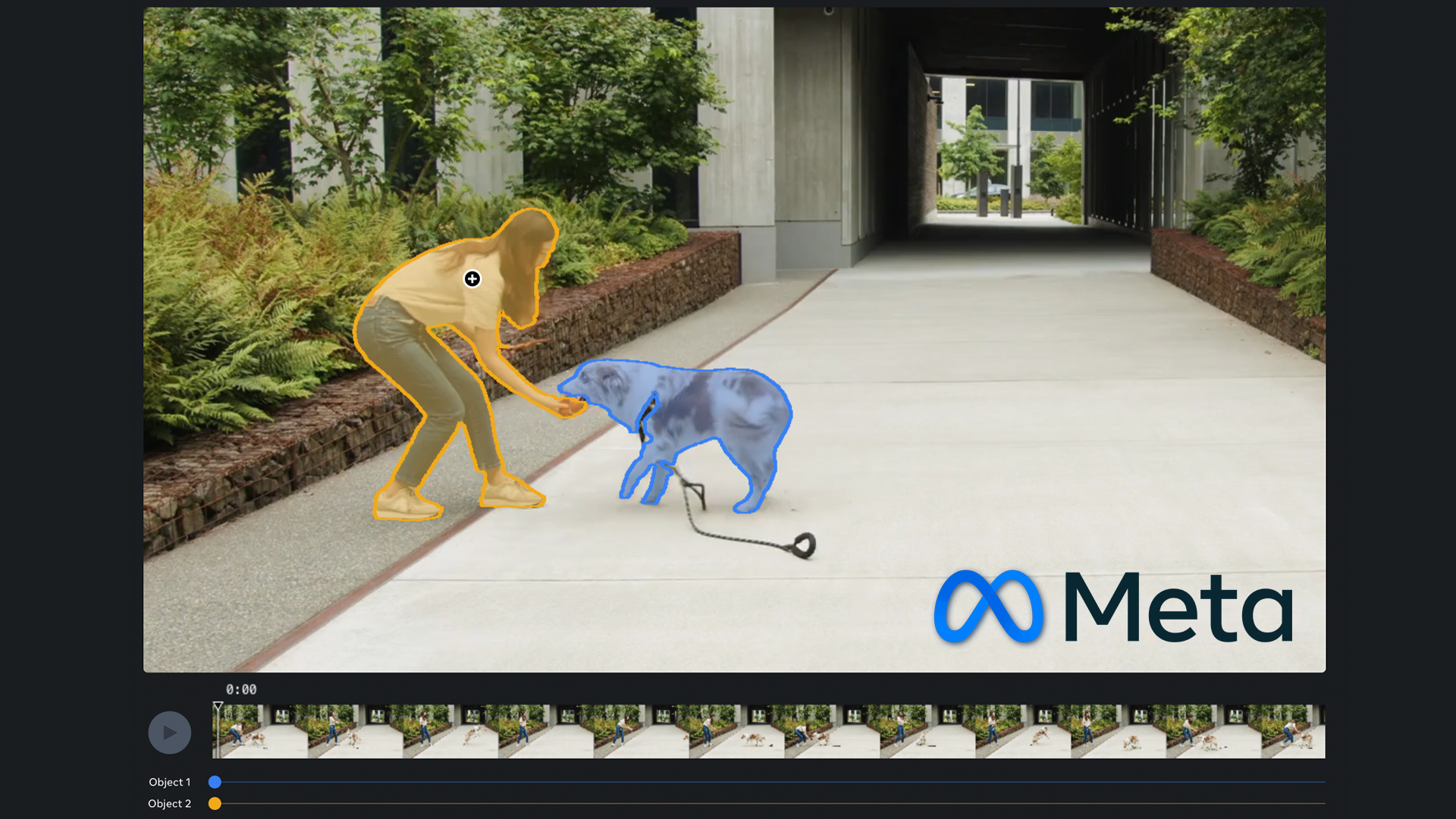

Meta has recently introduced SAM 2. Building on the takeaways of its predecessor, the new AI model is capable of segmenting various objects in images and videos and tracking them almost in real time. This next generation of the Segment Anything Model (or SAM) has lots of potential applications, from item identification in AR to fast and simple creative effects in video editing. SAM 2 by Meta is an open-source project and is also available as a web-based demo for testing. So, what are we waiting for? Let’s try it out!

If you use Instagram (which also belongs to the Meta family), then you are probably aware of stickers that users can create from any photo or picture with just one click. This feature, called “Backdrop and Cutouts,” uses Meta’s Segment Anything Model (SAM), which was released roughly a year ago. Since then, the company’s researchers have gone further and decided to develop a similar tool for moving images. Looking at the first tests: Well, they indeed succeeded.

## SAM 2 by Meta and its craft

> SAM 2 is a unified model for real-time promptable object segmentation in images and videos that achieves state-of-the-art performance.
>
> A quote from the SAM 2 release paper

According to Meta, SAM 2 can detect and segment any desired object in any video or image, even in visuals that the AI model hasn’t seen previously and wasn’t trained on. The developers based their approach on successful image segmentation and treated each image as a very short video with a single frame. Adopting this perspective, they were able to create a unified model that supports both image and video input. (If you want to read more about the technical side of SAM 2 training, please head over to the official research paper.)

A screenshot from the SAM 2 web-based demo interface. Image source: Meta

Seasoned video editors would probably shrug their shoulders: DaVinci Resolve has achieved the same with its AI-enhanced “Magic Mask” for what already seems like ages.
Well, a big difference here is that Meta’s approach is, on the one hand, open-source and, on the other hand, user-friendly for non-professionals. It can also be applied to more complicated cases, and we will take a look at some below.

## Open-source approach

As stated in the release announcement, Meta would like to continue its approach to open science. That’s why the company is sharing the code and model weights for SAM 2 under a permissive Apache 2.0 license. Anyone can download them here and use them for customized applications and experiences. You can also access the entire SA-V dataset, which was used to train Meta’s Segment Anything Model 2. It includes around 51,000 real-world videos and more than 600,000 spatio-temporal masks.

## How to use SAM 2 by Meta

For those of us who have no experience in AI model training (myself included), Meta also launched an intuitive and user-friendly web-based demo that anyone can try out for free and without registration. Just head over here, and let’s start.

The first step is to choose the video you’d like to alter or enhance. You can either pick one of the Meta demo clips available in the library or upload your own. (This option will appear when you click “Change video” in the lower left corner.) Now, select any object by simply clicking on it. If you continue clicking on different areas of the video, they will be added to your selection. Alternatively, you can choose “Add another object”. My tests showed that the latter approach brings better results when your objects move separately, for instance, the dog and the girl in the example below.

Image source: a screenshot from the demo test of SAM 2 by Meta

After you click “Track objects,” SAM 2 by Meta will take mere seconds and show you the preview. I must admit that my result was almost perfect. The AI model only missed the dog’s lead and the ball for a couple of frames.

Pressing the “Next” button lets you apply some of the demo effects from the library.
These could be simple overlays over objects or blurring the elements that were not selected. I went with desaturating the background and adding more contrast to it.

Image source: SAM 2 by Meta

Well, what can I say? Of course, the result is not 100% detail-precise, so we cannot compare it to frame-by-frame masking by hand. Yet for the mere seconds that the AI model needed, it is really impressive. Would you agree?

## Possible areas of application for SAM 2 by Meta

As Meta’s developers mention, there are countless areas of application for SAM 2 in the future, from aiding in scientific research (like segmenting moving cells in videos captured from a microscope) to identifying everyday items via AR glasses and adding tags and/or instructions to them.

Image source: Meta

For filmmakers and video creators, SAM 2 could become a fast solution for simple effects, such as masking out distracting elements, removing backgrounds, adding tracked-in infographics to objects, outlining elements, and so on. I’m pretty sure we won’t wait long to see these options in Instagram stories and reels. And to be honest, this will immensely simplify and accelerate editing workflows for independent creators.

Another possible application of SAM 2 by Meta is to add controllability to generative video models. As you know, for now, you cannot really ask a text-to-video generator to exchange a particular element or delete the background. Imagine, though, if that became possible. For the sake of the experiment, I fed SAM 2 a clip generated by Runway Gen-2 roughly a year ago. This one:

Then, I selected both animated characters, turned them into black cutouts, and blurred the rest of the frame. This was the result:

## Limitations

Of course, like any model in the research phase, SAM 2 has its limitations, and the developers acknowledge them and promise to work on solutions. These are:

- Sometimes, the model may lose track of objects. This occurs especially often across drastic camera viewpoint changes, in crowded scenes with many elements, or in extended videos. Say, when the dog in the test video above leaves the frame and then re-enters it, it’s not a problem. But if it were a pack of stray dogs, SAM 2 would likely lose the selected one.
- SAM 2 predictions can miss fine details in fast-moving objects.
- The results you have obtained with SAM 2 so far are considered research demos and may not be used for commercial purposes.
- The web-based demo is not open to those accessing it from Illinois or Texas in the U.S.

## You can try it out right now

As mentioned above, SAM 2 by Meta is available both as open-source code and as a web-based demo, which anyone can try out here. You don’t need to register for it, and the test platform allows saving the created videos or sharing the links.

What is your take on Segment Anything Model 2? Do you see it as a rapid improvement for video creators and editors, or does it make you worried? Why? What other areas of application can you imagine for this piece of tech? Let’s talk in the comments below!

Feature image source: a screenshot from the demo test of SAM 2 by Meta.